AWS News Blog

Yemeksepeti: Our Shift to Serverless Architecture

September 8, 2021: Amazon Elasticsearch Service has been renamed to Amazon OpenSearch Service. See details.

AWS Community Hero Onur Salk wrote the guest post below in order to tell you how he helped his employer to move to a serverless architecture.

— Jeff;

I’m Onur Salk, AWS Community Hero, AWS Certified Solutions Architect – Professional, and organizer of the AWS user group in Turkey. As a Hero, I like to share my AWS experience and knowledge with the community on my personal blog and through meetups. Today I want to share the story behind Yemeksepeti and our shift to serverless architecture.

The Story Behind Yemeksepeti

Yemeksepeti is the biggest online food ordering company in Turkey. It lets users place food orders from affiliated network restaurants without charging any extra fees. At Yemeksepeti, we needed to set up a globally distributed service that is scalable, high-performing, and cost-effective. Our belief is that by designing a serverless architecture, we won’t have to worry about managing our servers and can remove a lot of operational burdens from our team. This means we can focus on running our code at scale.

At Yemeksepeti.com, we developed a real-time discount system called Joker about four years ago. The purpose of this system is to offer customers restaurant discounts that they cannot normally find. The original Joker platform was developed in .NET and then integrated with the website and mobile devices using its REST API. We were asked to open the platform’s API to our sister companies operating in 34 countries, so that they could also provide real-time Joker discounts to their customers.

Initially, we thought we would share our code and let them integrate their applications. However, most of the other countries were using different technology stacks (programming languages, databases, and so on). Although using our code might accelerate their development at first, they would have to maintain an unfamiliar system. We needed to find an integration method that was easier to implement and cheaper to maintain.

Our Requirements

This was a global project, and these were our five focus areas:

- Ease of management

- High availability

- Scalability

- Use in several regions

- Cost advantage

We evaluated these focus areas against several different processing models and came up with the following matrix:

| Processing Model | Ease of Management | High Availability | Scalability | Use in Several Regions | Cost Advantage |
|---|---|---|---|---|---|
| IaaS: We could spin up some EC2 instances running IIS on top of Microsoft Windows Server and connected to an RDS DB instance. | No. We need to take care of our servers. | Yes. We can distribute our servers to different AZs. | Yes. We can use Auto Scaling. | Yes. We can use AMIs and copy between regions. | Partially. There will be license fees and costs for running EC2 instances. |
| PaaS: We could use AWS Elastic Beanstalk. | Partially. We need to take care of our servers. | Yes. We can distribute our servers to different AZs. | Yes. We can use Auto Scaling. | Yes. We can use environment configurations, AMIs, etc. | Partially. There will be license fees and costs for running EC2 instances. |
| FaaS: We could use AWS Lambda. | Yes. AWS takes care of the services. | Yes. It is already highly available. | Yes. It performs at any scale. | Yes. We can export/import/upload our configurations easily. | Yes. There are no licenses and we pay only for what we use. |

We decided to use FaaS (Functions as a Service). We started our project in the Europe (Ireland) region using the following services:

- Amazon Virtual Private Cloud (Amazon VPC)

- Amazon API Gateway

- AWS Lambda

- Amazon Relational Database Service (Amazon RDS)

- Amazon Simple Storage Service (Amazon S3)

- Amazon CloudWatch

- Amazon Elasticsearch Service

Architecture

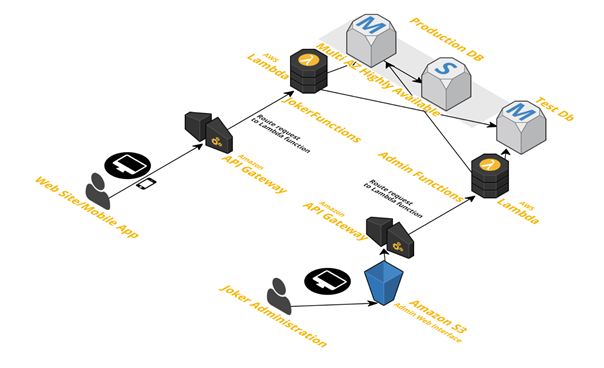

Our architecture looks like this:

Amazon VPC: We use Amazon VPC to launch our resources in our private network.

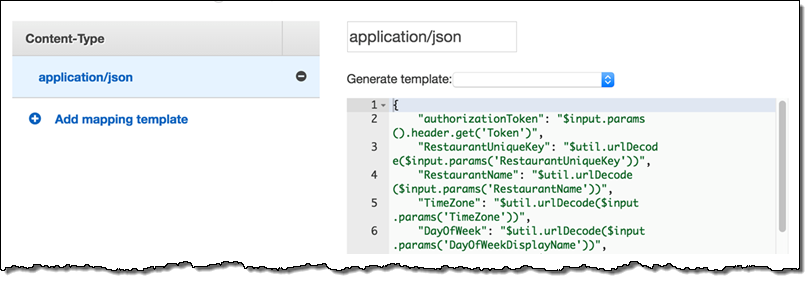

Amazon API Gateway: During the development phase, we started to develop the service in the Europe (Ireland) region. At that time, AWS Lambda was not available in Europe (Frankfurt). We created two APIs: one for web integration and the other for the admin interface. We used custom authorizers with JSON Web Tokens (JWT) to enable token-based authorization for our APIs. We used mapping templates to pass our variables to our Lambda functions.
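To illustrate the token-based authorization described above, here is a minimal sketch of a token-type custom authorizer written as a Python Lambda function. It is not the actual Yemeksepeti code. It assumes HS256-signed tokens and a shared secret, and the names `SECRET`, `verify_hs256`, `sign_hs256`, and `build_policy` are hypothetical. Only the standard library is used so that the example stays self-contained.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical shared secret; a real deployment would load it from secure configuration.
SECRET = b"example-secret"


def _b64url_decode(segment):
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(raw):
    """Encode bytes as unpadded base64url text."""
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def sign_hs256(claims, secret):
    """Create an HS256-signed JWT (used here only to produce sample tokens)."""
    header_b64 = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload_b64 = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    return f"{header_b64}.{payload_b64}.{_b64url_encode(signature)}"


def verify_hs256(token, secret):
    """Return the claims of a valid HS256 JWT, or raise ValueError."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
        if not isinstance(header, dict) or not isinstance(claims, dict):
            raise ValueError("header and payload must be JSON objects")
    except Exception as exc:
        raise ValueError("malformed token") from exc
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        raise ValueError("bad signature")
    exp = claims.get("exp")
    if isinstance(exp, (int, float)) and exp < time.time():
        raise ValueError("token expired")
    return claims


def build_policy(principal_id, effect, method_arn):
    """Build the IAM policy document that API Gateway expects from an authorizer."""
    return {
        "principalId": principal_id,
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {"Action": "execute-api:Invoke", "Effect": effect, "Resource": method_arn}
            ],
        },
    }


def handler(event, context):
    """Allow the call when the bearer token verifies; deny it otherwise."""
    token = event.get("authorizationToken", "")
    if token.startswith("Bearer "):
        token = token[len("Bearer "):]
    try:
        claims = verify_hs256(token, SECRET)
    except ValueError:
        return build_policy("anonymous", "Deny", event["methodArn"])
    return build_policy(str(claims.get("sub", "unknown")), "Allow", event["methodArn"])
```

API Gateway calls the authorizer with the token and the ARN of the requested method, and uses the returned policy to allow or reject the request before the backing function runs.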

In the development phase, there was only a test stage for each API.

During the production phase, AWS Lambda became available in Frankfurt. We decided to move the service there to benefit from low latency access from Turkey. We used the API Gateway Export API feature to export our configuration in Swagger format, and then imported it into Frankfurt. (Before the import, we changed the region definitions in the exported file to eu-central-1.) After that, we created a production stage and used stage variables to parameterize our database definitions of the Amazon RDS instances (like host, username, and so on). We also wanted to use our custom domain name. After we bought an SSL certificate for our domain, we created a custom domain name in the Amazon API Gateway console and created an alias for our CloudFront distribution name (Amazon API Gateway uses Amazon CloudFront in the background). Finally, we created an IAM role to enable Amazon CloudWatch logging for API calls, latency, and more.
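The region change in the exported file can be scripted. The following is a minimal sketch, assuming the export is Swagger JSON in which the region appears inside string values such as integration URIs. The function names, the sample path `/offers`, the function name `get_offer`, and the account ID are hypothetical and only serve the illustration.

```python
import json

SOURCE_REGION = "eu-west-1"     # Europe (Ireland)
TARGET_REGION = "eu-central-1"  # Europe (Frankfurt)


def retarget_region(node, source=SOURCE_REGION, target=TARGET_REGION):
    """Recursively replace the source region name in every string value."""
    if isinstance(node, dict):
        return {key: retarget_region(value, source, target) for key, value in node.items()}
    if isinstance(node, list):
        return [retarget_region(item, source, target) for item in node]
    if isinstance(node, str):
        return node.replace(source, target)
    return node


def retarget_swagger(swagger_text):
    """Parse exported Swagger JSON, rewrite the region, and serialize it again."""
    return json.dumps(retarget_region(json.loads(swagger_text)), indent=2)


# Hypothetical fragment of an export; real exports contain many more fields.
EXAMPLE_EXPORT = json.dumps({
    "swagger": "2.0",
    "paths": {
        "/offers": {
            "get": {
                "x-amazon-apigateway-integration": {
                    "type": "aws",
                    "httpMethod": "POST",
                    "uri": (
                        "arn:aws:apigateway:eu-west-1:lambda:path/2015-03-31/functions/"
                        "arn:aws:lambda:eu-west-1:123456789012:function:get_offer/invocations"
                    ),
                }
            }
        }
    },
})

if __name__ == "__main__":
    print(retarget_swagger(EXAMPLE_EXPORT))
```

Walking the parsed document instead of replacing text in the raw file keeps the output valid JSON and leaves keys untouched, at the cost of a few more lines.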

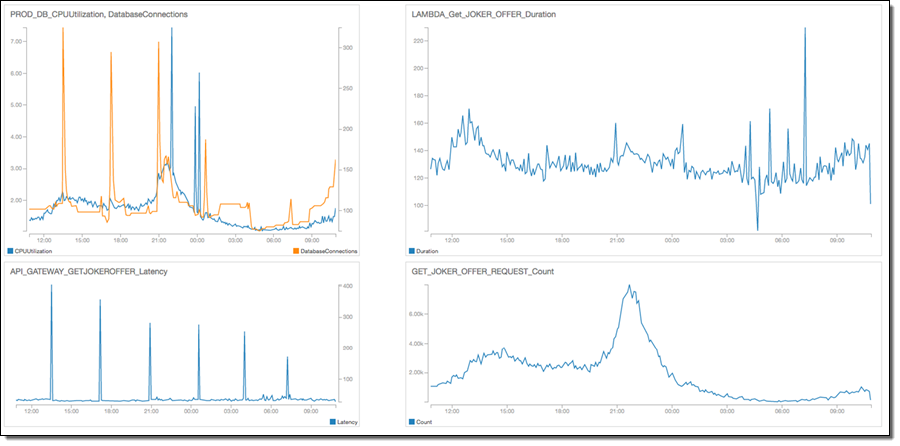

Metrics for Get_Joker_offer resource:

AWS Lambda: During the development phase, we used Python to develop our service and created 65 functions for integrating our API methods and scheduled tasks using CloudWatch Events Lambda triggers. Lambda VPC integration became available during the production phase, so we uploaded our functions to the Frankfurt region and integrated them with VPC.

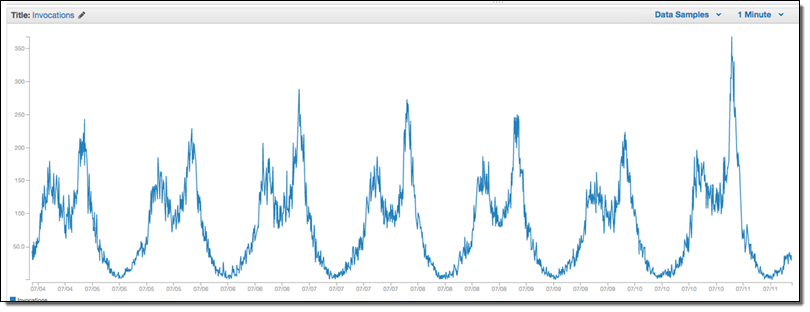

Invocation count of the Get_joker_offer Lambda function (the peaks correspond to lunch and dinner times, when people are hungry):

Amazon RDS: During the development phase, we chose to use Amazon RDS for PostgreSQL. We created a single-AZ RDS instance to test our service. During the production phase, we needed to move our database because we migrated our APIs and functions to Frankfurt. We created a snapshot of our instance and used the Copy snapshot feature of RDS to move our database. We launched two instances in our VPC: a multi-AZ instance for production and a single-AZ instance for test purposes. In our API stage variables, we defined the endpoint names of our RDS instances to map each stage to the appropriate instance. We also enabled automated backups for both instances.
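As an illustration of how stage variables can select the database per stage, here is a minimal sketch of a Lambda-side helper. It assumes the API Gateway mapping template copies the stage variables into the event under a `stage_variables` key. That key, the variable names `db_host`, `db_user`, `db_name`, and `db_port`, and the endpoint names in the test are all hypothetical. Credentials and the actual PostgreSQL connection are left out on purpose.

```python
from dataclasses import dataclass

REQUIRED_KEYS = ("db_host", "db_user", "db_name")  # hypothetical stage variable names


@dataclass(frozen=True)
class DbConfig:
    """Connection settings resolved for the stage that served the request."""
    host: str
    user: str
    name: str
    port: int = 5432  # default PostgreSQL port


def db_config_from_event(event):
    """Build the database settings from the stage variables passed in the event."""
    stage = event.get("stage_variables") or {}
    missing = [key for key in REQUIRED_KEYS if not stage.get(key)]
    if missing:
        raise KeyError("missing stage variables: " + ", ".join(missing))
    return DbConfig(
        host=stage["db_host"],
        user=stage["db_user"],
        name=stage["db_name"],
        port=int(stage.get("db_port", 5432)),
    )


def handler(event, context):
    """Report which database endpoint this invocation would use."""
    config = db_config_from_event(event)
    return {"db_host": config.host, "db_name": config.name}
```

With this arrangement the same function code serves both the test and production stages, and only the stage configuration decides which RDS instance is used.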

Amazon S3: The Joker platform has an admin panel that’s used for managing and reporting Joker offers. To host this administration interface, which is basically a Single Page Application (SPA) with AngularJS, we used the static website hosting feature of Amazon S3. All of the logic and functionality is provided by methods running on Lambda, so we didn’t need a server for the admin interface:

Amazon CloudWatch: We use the service to monitor the usage of our important assets and to get alerts if something goes wrong. We created a custom dashboard to monitor the CPU of our production database, connection count, critical API latencies and function counts and durations.

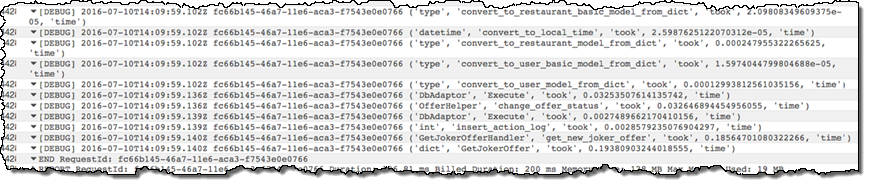

In our Python code, we log the durations of each inner method in CloudWatch to track performance and find any bottlenecks:
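A minimal sketch of this kind of duration logging is shown below, assuming a decorator-based approach. The decorator name `log_duration`, the logger name, the log line format, and the sample function `find_offers` are hypothetical. Inside Lambda, output written through the standard `logging` module ends up in CloudWatch Logs.

```python
import functools
import logging
import time

logger = logging.getLogger("joker.timing")  # hypothetical logger name
logger.setLevel(logging.INFO)


def log_duration(func):
    """Log how long each call to the wrapped function takes, in milliseconds."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            # Runs on success and on failure, so slow error paths are visible too.
            elapsed_ms = (time.perf_counter() - start) * 1000
            logger.info("method=%s duration_ms=%.2f", func.__name__, elapsed_ms)
    return wrapper


@log_duration
def find_offers(restaurant_ids):
    """Hypothetical inner method standing in for a real offer lookup."""
    return [rid for rid in restaurant_ids if rid % 2 == 0]
```

A fixed `key=value` line format makes it straightforward to filter the log entries by method name later or to turn them into metrics.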

Here’s our CloudWatch dashboard:

Amazon Elasticsearch Service: During the development phase, CloudWatch Logs streaming to Amazon ES became available in the Ireland region. Using this feature, we created a Kibana dashboard to monitor some other metrics from the logs we generate from our code. As soon as Amazon ES is available in the Frankfurt region, we will use it again.

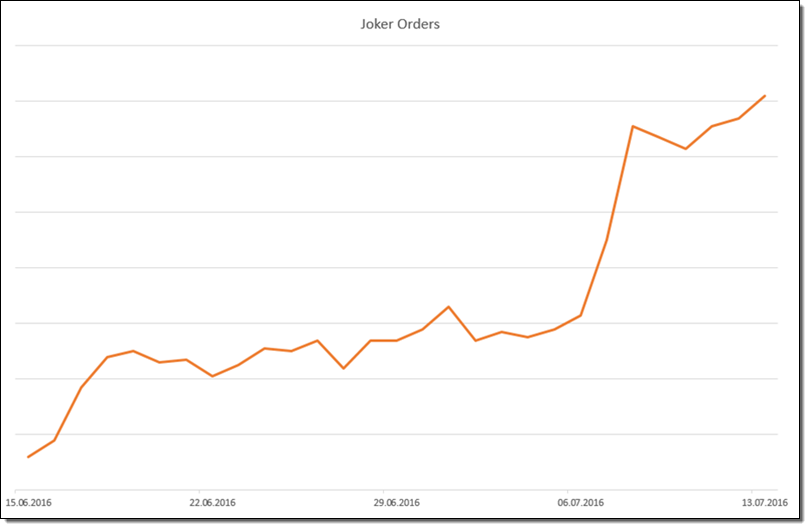

Initial Results

The Joker system is in production now, as a pilot for a small region of a country. As you can see from the following chart, the growth in the number of orders is promising. By leveraging serverless architecture, we didn’t have to install and manage an operating system and dependencies. Using Amazon API Gateway, AWS Lambda, Amazon S3, and Amazon RDS, our architecture runs in a highly available environment. We don’t need to learn and manage any master-slave replication features or third-party tools. As our service gets more requests, AWS Lambda adds more Lambda instances, so it runs at any scale. We are able to copy our service to another region using the features of AWS services, as we did before going into production. Finally, we don’t run any servers, so we benefit from the cost advantage of serverless architecture.

Here is a representation of the number of orders placed through Joker:

What’s Next

We hope this service will spread to all 34 of the sister companies within Delivery Hero Holding. As the service is rolled out globally, we will deploy to other AWS regions. We plan to choose the region nearest to each company. To optimize our costs, we will purchase reserved instances for our RDS instances. Also, as we monitor our inner methods’ durations, we can refactor and optimize our code to decrease our Lambda functions’ execution times.

We believe the future of the cloud is FaaS. We would like to experiment more as other features, services, and functions become available.

As an AWS Community Hero, I look forward to sharing the Yemeksepeti story with the AWS user group in Turkey. I’d like to help people explore and leverage serverless architecture.

— Onur Salk