AWS News Blog

New – AWS Deep Learning Containers

We want to make it as easy as possible for you to learn about deep learning and to put it to use in your applications. If you know how to ingest large datasets, train existing models, build new models, and perform inferences, you’ll be well-equipped for the future!

New Deep Learning Containers

Today I would like to tell you about the new AWS Deep Learning Containers. These Docker images are ready to use for deep learning training or inferencing using TensorFlow or Apache MXNet, with other frameworks to follow. We built these containers after our customers told us that they are using Amazon EKS and Amazon ECS to deploy their TensorFlow workloads to the cloud, and asked us to make that task as simple and straightforward as possible. While we were at it, we optimized the images for use on AWS with the goal of reducing training time and increasing inferencing performance.

The images are pre-configured and validated so that you can focus on deep learning and set up custom environments and workflows on Amazon Elastic Container Service (Amazon ECS), Amazon Elastic Kubernetes Service (Amazon EKS), and Amazon Elastic Compute Cloud (Amazon EC2) in minutes! You can find them in AWS Marketplace and Amazon Elastic Container Registry (Amazon ECR), and use them at no charge. The images can be used as-is, or customized with additional libraries or packages.

Multiple Deep Learning Containers are available, with names based on the following factors (not all combinations are available):

- Framework – TensorFlow or MXNet.

- Mode – Training or Inference. You can train on a single node or on a multi-node cluster.

- Environment – CPU or NVIDIA GPU.

- Python Version – 2.7 or 3.6.

- Distributed Training – Availability of the Horovod framework.

- Operating System – Ubuntu 16.04.

Using Deep Learning Containers

To put an AWS Deep Learning Container to use, I create an Amazon ECS cluster with an NVIDIA GPU-powered p2.8xlarge instance:

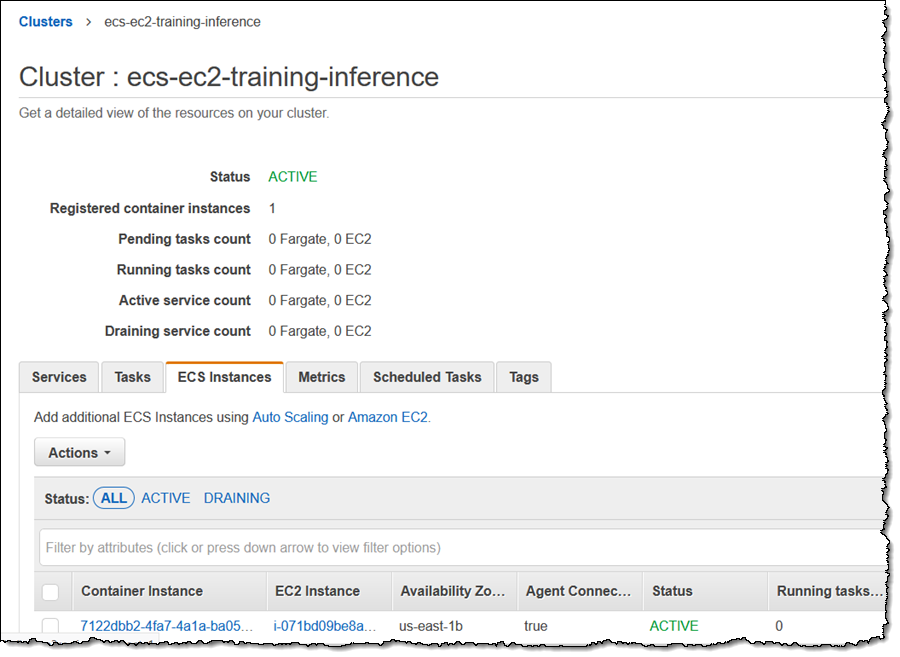

I verify that the cluster is running, and check that the ECS Container Agent is active:
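The same check can also be scripted. Here is a minimal sketch, assuming a boto3 ECS client is passed in; the function name `cluster_is_ready` and the cluster name in the usage note are hypothetical and not taken from the walkthrough:

```python
def cluster_is_ready(ecs_client, cluster_name):
    """Return True if the cluster is ACTIVE and every registered
    container instance reports a connected ECS Container Agent."""
    clusters = ecs_client.describe_clusters(clusters=[cluster_name])["clusters"]
    if not clusters or clusters[0]["status"] != "ACTIVE":
        return False

    arns = ecs_client.list_container_instances(cluster=cluster_name)[
        "containerInstanceArns"
    ]
    if not arns:
        return False  # no instances have joined the cluster yet

    instances = ecs_client.describe_container_instances(
        cluster=cluster_name, containerInstances=arns
    )["containerInstances"]
    # agentConnected is reported per container instance by ECS
    return all(instance["agentConnected"] for instance in instances)
```

With boto3 installed and credentials configured, this could be called as `cluster_is_ready(boto3.client("ecs"), "my-dl-cluster")`.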

Then I create a task definition in a text file (gpu_task_def.txt):

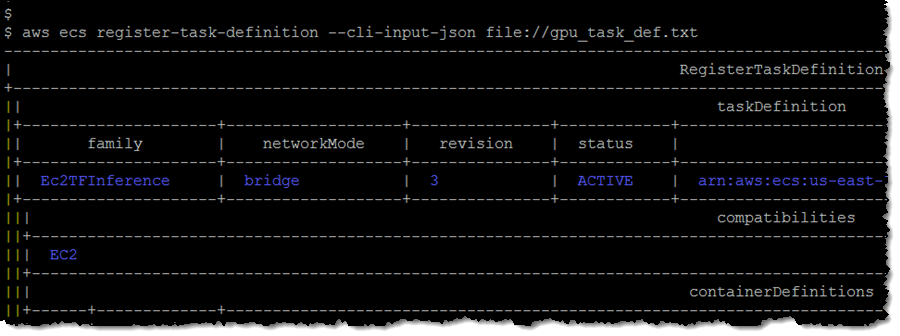
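For readers who cannot see the screenshot, here is a rough sketch of what a GPU task definition of this kind might contain. It is not the exact file used above: the family name, container name, memory, and CPU values are illustrative assumptions, and the image URI is left as a parameter because it depends on which Deep Learning Container you pick.

```python
import json


def build_task_definition(image_uri):
    """Build an ECS task definition (EC2 launch type) that requests one GPU
    and exposes port 8501 from the container."""
    return {
        "family": "dl-inference-demo",       # hypothetical family name
        "requiresCompatibilities": ["EC2"],
        "networkMode": "bridge",
        "containerDefinitions": [
            {
                "name": "tf-inference",      # hypothetical container name
                "image": image_uri,          # URI of the container image you chose
                "essential": True,
                "memory": 8000,              # MiB, illustrative value
                "cpu": 256,                  # CPU units, illustrative value
                "resourceRequirements": [{"type": "GPU", "value": "1"}],
                "portMappings": [
                    {"containerPort": 8501, "hostPort": 8501, "protocol": "tcp"}
                ],
            }
        ],
    }


def to_json(task_definition):
    """Serialize the task definition so it can be saved to a text file."""
    return json.dumps(task_definition, indent=2)
```

Saving the output of `to_json(build_task_definition(image_uri))` to a file such as gpu_task_def.txt would give a starting point to adapt.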

I register the task definition and capture the revision number (3):

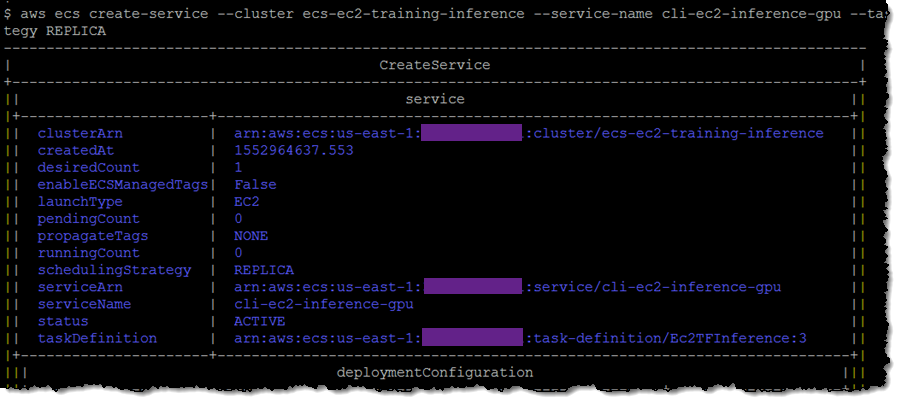
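If you prefer to register the task definition from code, something like the following could work. This is a sketch under assumptions: it expects a boto3 ECS client and a task definition already loaded as a Python dict, and the helper name `register_and_get_revision` is hypothetical.

```python
def register_and_get_revision(ecs_client, task_definition):
    """Register a task definition and return its (family, revision) pair.

    ECS assigns a new revision number each time a definition is
    registered under the same family.
    """
    response = ecs_client.register_task_definition(**task_definition)
    registered = response["taskDefinition"]
    return registered["family"], registered["revision"]
```

The returned revision is the number that the next step needs when it refers to the task definition.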

Next, I create a service using the task definition and revision number:

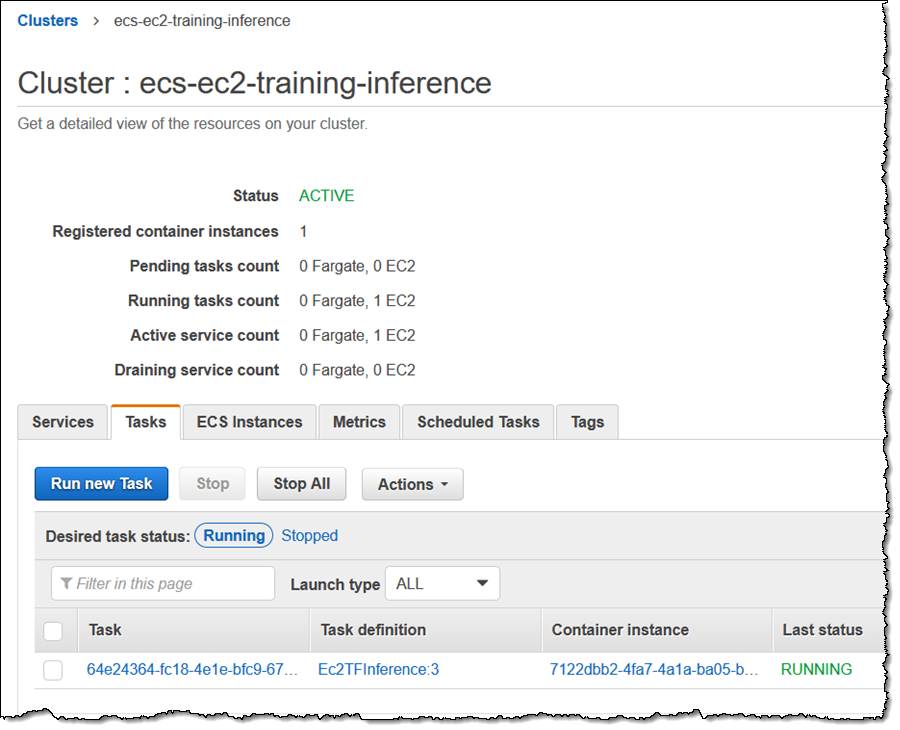

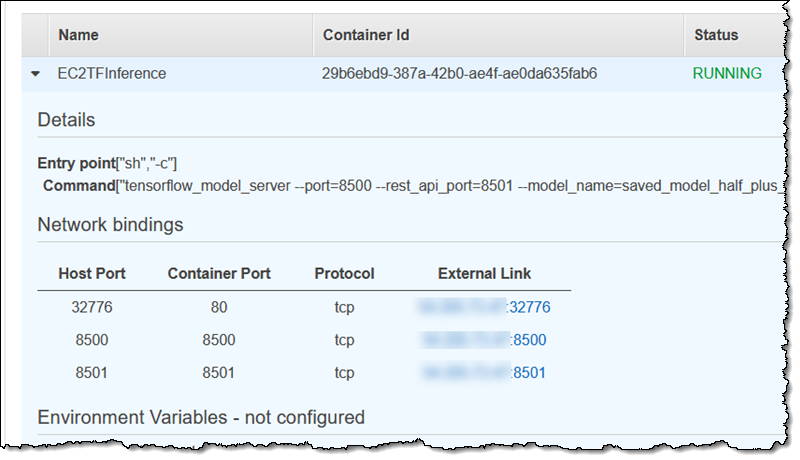
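Creating the service can be sketched in code as well. The example below assumes a boto3 ECS client and the EC2 launch type; the helper name and the default of one task are assumptions made for illustration.

```python
def create_inference_service(ecs_client, cluster, service_name,
                             family, revision, desired_count=1):
    """Create an ECS service that runs a specific task definition revision."""
    response = ecs_client.create_service(
        cluster=cluster,
        serviceName=service_name,
        # "family:revision" pins the service to one registered revision
        taskDefinition=f"{family}:{revision}",
        desiredCount=desired_count,
        launchType="EC2",
    )
    return response["service"]["serviceArn"]
```

Pinning the revision explicitly, rather than passing only the family name, keeps the service on the definition you just registered even if newer revisions are added later.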

I use the console to navigate to the task:

Then I find the external binding for port 8501:
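The console is the quickest way to look this up, but the binding is also present in the output of the ECS `describe_tasks` call. A small sketch, assuming you already have that response as a Python dict (the helper name `find_host_port` is hypothetical):

```python
def find_host_port(describe_tasks_response, container_port=8501):
    """Return (bind_ip, host_port) for the given container port,
    or None if no running container exposes it."""
    for task in describe_tasks_response.get("tasks", []):
        for container in task.get("containers", []):
            for binding in container.get("networkBindings", []):
                if binding.get("containerPort") == container_port:
                    return binding.get("bindIP"), binding.get("hostPort")
    return None
```

Note that the bind IP is the address inside the host (often 0.0.0.0); to reach the port from outside you would still need the public address of the EC2 instance.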

Then I run three inferences (this particular model was trained on the function y = ax + b, with a = 0.5 and b = 2):

As you can see, the inference predicted the values 2.5, 3.0, and 4.5 when given inputs of 1.0, 2.0, and 5.0. This is a very, very simple example but it shows how you can use a pre-trained model to perform inferencing in ECS via the new Deep Learning Containers. You can also launch a model for training purposes, perform the training, and then run some inferences.
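To make the arithmetic and the request shape concrete, here is a minimal sketch. It assumes the container is serving the model through the TensorFlow Serving REST API on port 8501; the host and model name are placeholders that you would supply, and the function names are hypothetical.

```python
import json
import urllib.request


def expected_outputs(inputs, a=0.5, b=2.0):
    """Compute y = a*x + b, the function the model was trained on."""
    return [a * x + b for x in inputs]


def build_predict_request(host, port, model_name, inputs):
    """Build a TensorFlow Serving REST predict request (not yet sent)."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": inputs}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(host, port, model_name, inputs, timeout=10):
    """Send the request and return the list under the "predictions" key."""
    request = build_predict_request(host, port, model_name, inputs)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["predictions"]


# The values quoted above follow directly from y = 0.5x + 2.
assert expected_outputs([1.0, 2.0, 5.0]) == [2.5, 3.0, 4.5]
```

Calling `predict(host, 8501, model_name, [1.0, 2.0, 5.0])` against a running service should return values matching `expected_outputs` if the model behaves as described.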

— Jeff;